We call it AI as long as it does not work!

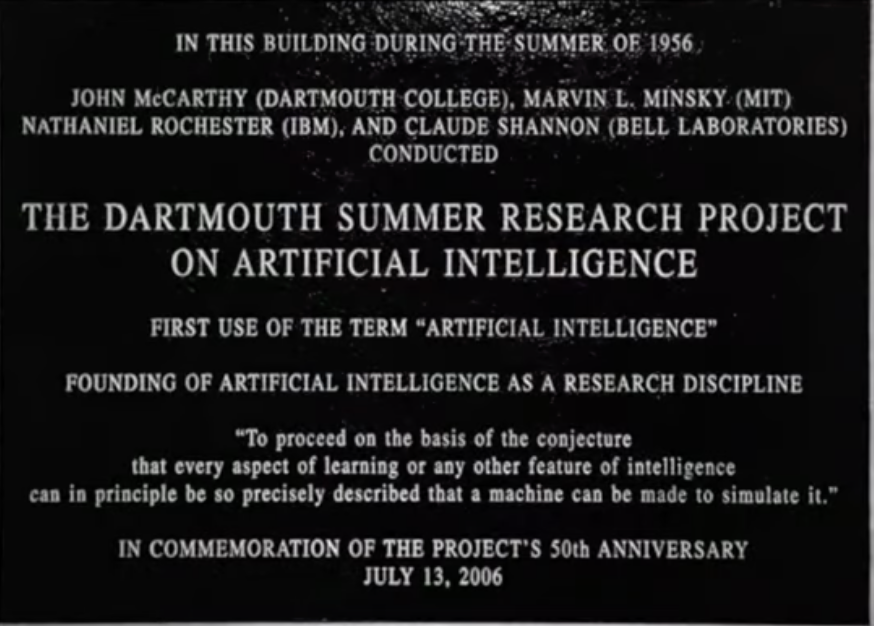

In August 1955, a group of scientists made a funding request for US$13,500 ($150k adjusted for inflation) to host a summer workshop at Dartmouth College, New Hampshire. The field they proposed to explore was artificial intelligence (AI).

While the funding request was humble, the conjecture of the researchers was not:

“Every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it”.

The ambitious goal was to build artificial intelligence software to make the military operate more efficiently. It did not go particularly well; computing at the time was pretty primitive. Eliza originates from this effort.

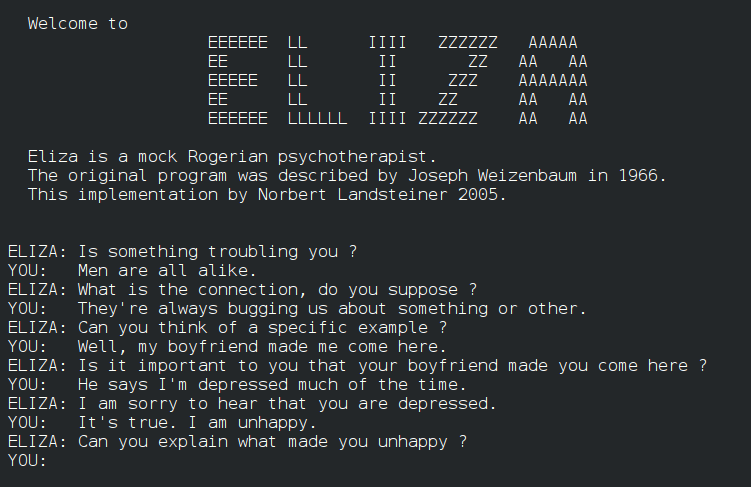

Eliza is a natural language processing computer program created by MIT professor Joseph Weizenbaum between 1964 and 1966. It was one of the first chatbots ever created and was designed to mimic conversation with a therapist, based on Rogerian psychotherapy.

To create Eliza, Weizenbaum used pattern matching and substitution techniques: the program recognized certain phrases in the input and replied with a matching canned response. It took input in the form of natural language phrases and responded with a message that seemed to come from a human therapist.
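The pattern matching and substitution idea can be sketched in a few lines of Python. This is only an illustration: the rules, the pronoun table, and the names `RULES`, `REFLECTIONS`, `reflect`, and `respond` are made up for this example and are not taken from Weizenbaum's original script.

```python
import re

# Hypothetical rules for illustration; not Weizenbaum's original script.
# Each rule pairs a pattern with a reply template that reuses the captured text.
RULES = [
    (re.compile(r"\bI need (.+)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bI am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (mother|father)\b", re.IGNORECASE), "Tell me more about your {0}."),
]

# Pronoun swaps so the echoed fragment reads from the program's point of view.
REFLECTIONS = {"i": "you", "my": "your", "me": "you", "am": "are", "you": "I", "your": "my"}

DEFAULT_REPLY = "Please go on."


def reflect(fragment: str) -> str:
    """Swap first-person and second-person words in the captured fragment."""
    words = fragment.lower().split()
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def respond(user_input: str) -> str:
    """Return the reply of the first matching rule, or a generic fallback."""
    text = user_input.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(reflect(match.group(1)))
    return DEFAULT_REPLY


print(respond("I need a break from my job."))
print(respond("Nice weather today"))
```

Nothing here understands the sentence. The program only rearranges the user's own words, which is enough to create the illusion of a conversation.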

Eliza was not designed to be a sophisticated chatbot, but rather a simple program that could demonstrate the potential for computers to communicate with humans in a more natural way. Despite its simplicity, Eliza was successful in creating the illusion of conversation and remains a classic example of natural language processing.

People tend to project intelligence onto anything that can chat.

What does 'AI' actually mean?

AI stands for artificial intelligence. Artificial intelligence refers to the ability of a machine or computer system to perform tasks that typically require human-like intelligence, such as understanding language, recognizing images, making decisions, and solving problems.

There are different types of AI, ranging from simple rule-based systems to more complex ones that can learn and adapt to new situations. Some examples of AI include self-driving cars, language translation systems, and virtual assistants like Siri or Alexa.

AI is a rapidly growing field, with new developments and applications being discovered all the time. It has the potential to transform many industries and has already had a significant impact on fields such as healthcare, finance, and transportation.

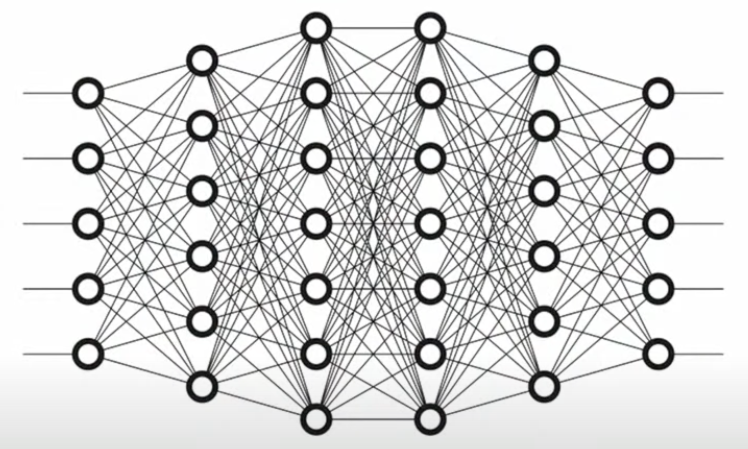

Neural networks and deep learning are related technologies that are often used in the field of artificial intelligence (AI).

A neural network is a type of machine learning model that is inspired by the structure and function of the human brain. It consists of layers of interconnected “neurons,” which process and transmit information. Each neuron receives input from other neurons and uses that input to calculate and output a result. Neural networks can learn and adapt to new data, making them well-suited for tasks such as image and speech recognition.
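As a rough sketch of the "neuron receives input and calculates a result" idea, the snippet below computes a weighted sum and squashes it with a sigmoid. The sigmoid activation, the weights, and the names `neuron` and `layer` are assumptions chosen for illustration; real networks use many activation functions and learn their weights from data.

```python
import math


def sigmoid(x: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def neuron(inputs, weights, bias):
    """Weighted sum of the incoming signals, followed by the activation."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(total)


def layer(inputs, weight_rows, biases):
    """A layer is several neurons that all read the same inputs."""
    return [neuron(inputs, weights, bias) for weights, bias in zip(weight_rows, biases)]


# Two inputs -> a hidden layer of two neurons -> one output neuron.
# The weights are arbitrary illustrative values, not trained ones.
hidden = layer([1.0, 0.0], [[0.5, -0.5], [-1.0, 1.0]], [0.0, 0.0])
output = neuron(hidden, [1.0, 1.0], 0.0)
print(hidden, output)
```

Stacking more such layers between input and output is what the word "deep" refers to.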

Deep learning is a subfield of machine learning that is inspired by the structure and function of the brain, specifically the neural networks that make up the brain. It involves the use of neural networks with many layers (hence the term “deep”), which can learn and extract features from raw data. Deep learning has been particularly successful in tasks such as image and speech recognition, natural language processing, and machine translation.

Logistics and Planning was once AI

In the late 60s, the US military had an organizational system for moving large amounts of material around conflict zones that remains unrivalled to this day. But it was not called AI. As soon as something works, it gets a real name: this one became planning and logistics.

The story continued throughout the 70s, 80s, 90s until now.

In the 70s, the trend was expert systems: computer programs that used artificial intelligence techniques, chiefly rule-based reasoning, to mimic the decision-making abilities of a human expert in a specific domain.
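A minimal sketch of the rule-based idea, assuming a toy car-diagnosis domain: the rules, the fact names, and the `infer` function are all hypothetical and do not come from any historical expert system. The loop is a simple forward-chaining strategy, one common way such systems derive conclusions.

```python
# Hypothetical rules for a toy car-diagnosis domain.
# Each rule says: if all conditions are known facts, add the conclusion.
RULES = [
    ({"engine_wont_start", "lights_dim"}, "battery_weak"),
    ({"battery_weak"}, "recommend_charge_battery"),
    ({"engine_wont_start", "fuel_gauge_empty"}, "recommend_refuel"),
]


def infer(facts):
    """Forward chaining: keep applying rules until no new fact appears."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in RULES:
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


print(sorted(infer({"engine_wont_start", "lights_dim"})))
```

The "expertise" lives entirely in the hand-written rules, which is why such systems worked well in narrow domains and became costly to maintain as the rule base grew.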

In the 80s, the new and shiny AI trend was industrial robots, or automotive robots. These mechanical systems are used in the automotive industry to perform tasks such as welding, painting, and assembly. These robots are typically programmed to perform specific tasks and are not considered to be fully autonomous or capable of exhibiting artificial intelligence.

However, some automotive robots may incorporate elements of AI, such as machine learning or image recognition, to improve their performance or adapt to changing conditions. For example, an automotive robot might be trained to recognize different parts or detect defects in a manufacturing process using machine learning algorithms.

Overall, the use of AI in automotive robots is limited and is typically focused on specific tasks or processes rather than full autonomy.

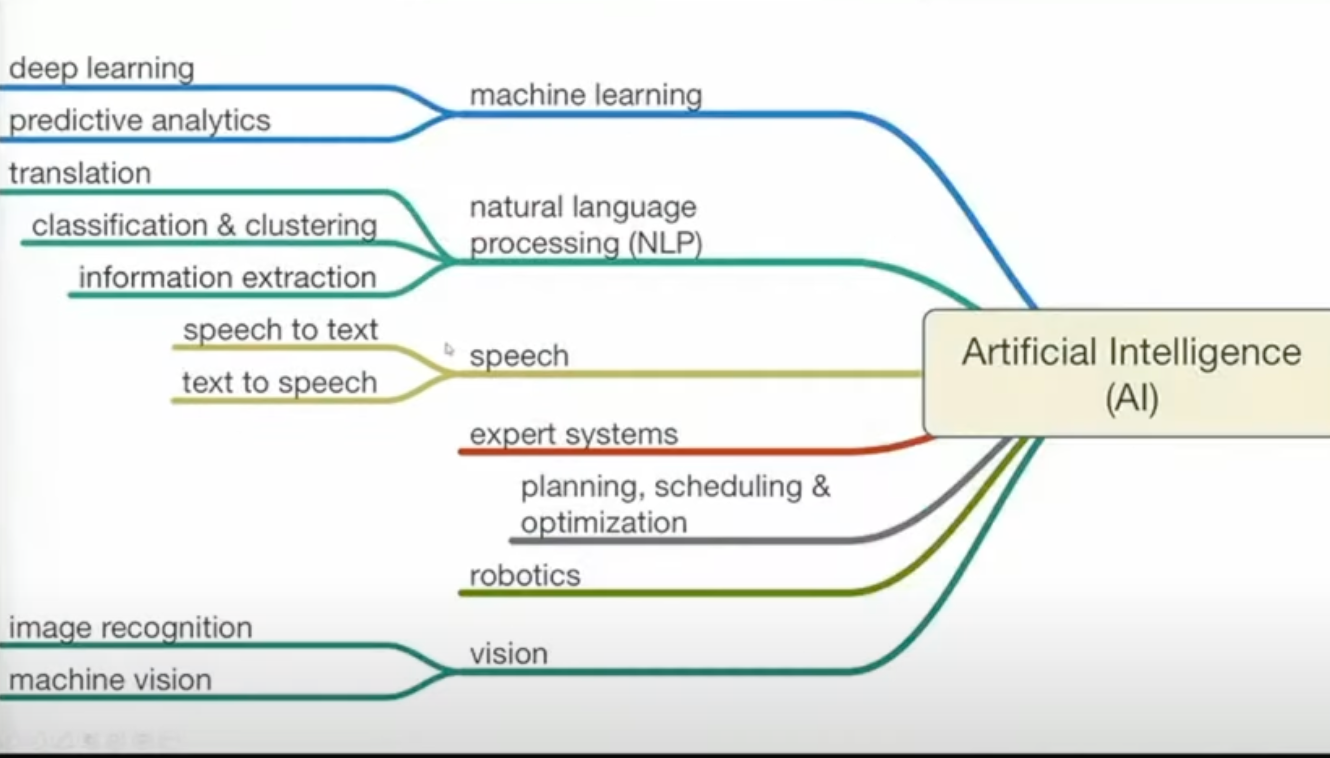

The graph above is the best overview of all these technologies. The shortest lines mark the oldest work. Planning, scheduling & optimization is the military work from the 1950s. In the 70s come expert systems and robotics, followed by early speech recognition. The longest lines are the most recent. The figure captures the progress made since the first AI workshop: some of it heuristics, some of it neural networks, all of it broadening the horizon.

Notice all the names that don't say artificial intelligence anymore.

(As artificial intelligence means it doesn't work yet.)

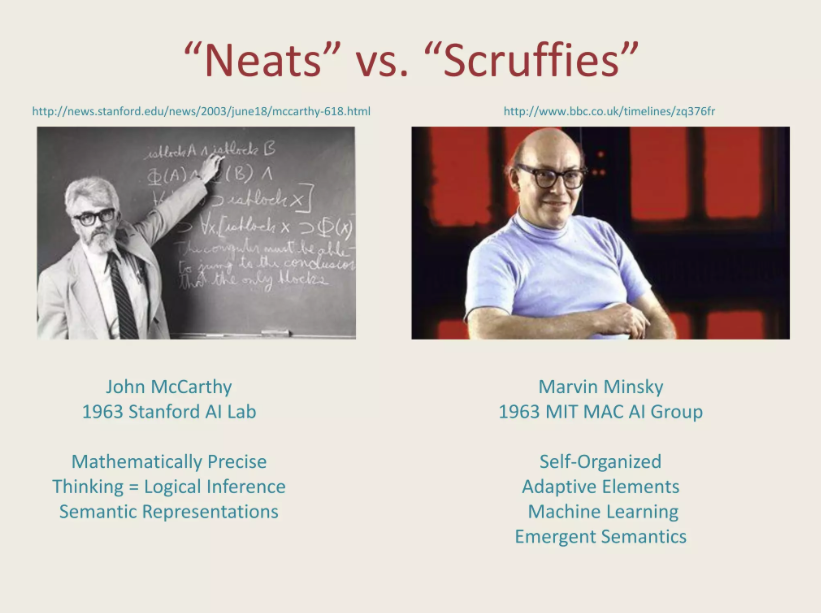

The "Neats" vs. the "Scruffies"

The terms “neats” and “scruffies” are used to describe two different approaches to artificial intelligence (AI) research and development.

The “neats” approach is characterized by a focus on developing AI systems that are precise, logical, and formally defined. This approach emphasizes the use of formal methods and mathematical models to build AI systems that are transparent and easy to understand.

In contrast, the “scruffies” approach is characterized by a more pragmatic and exploratory approach to AI development. This approach is less concerned with formal methods and is more focused on building AI systems that are flexible and adaptable, even if they are less precise or transparent.

The distinction between “neats” and “scruffies” is usually attributed to AI researcher Roger Schank in the 1970s, and the terms continue to be used to describe different approaches to AI research and development. While both approaches have their strengths and weaknesses, it is generally believed that a combination of the two is necessary for the development of effective and robust AI systems.

Both of these approaches emerged out of the 70s and 80s and had technological success going into the 90s.

Deep Blue was the ultimate manifestation of the “neats” approach. Developed by IBM, it was a chess-playing computer, notable as the first to defeat a reigning world chess champion in a match when it beat Garry Kasparov in 1997.

Deep Blue was a highly specialized system that was built specifically for playing chess. It used advanced artificial intelligence techniques, including brute force search algorithms and advanced evaluation functions, to analyze and evaluate chess positions.
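To give a feel for "search plus evaluation function", here is a depth-limited minimax sketch on a toy game rather than chess: players alternately take 1 to 3 sticks from a pile, and whoever takes the last stick wins. The game, the heuristic in `evaluate`, and the names `minimax` and `best_move` are assumptions for illustration only and say nothing about Deep Blue's actual implementation, which was far more elaborate.

```python
def evaluate(pile: int, maximizing: bool) -> int:
    """Heuristic guess used when the search is cut off.

    In this toy game, a pile that is a multiple of 4 is bad for the
    player about to move. Scores are from the maximizer's point of view.
    """
    good_for_mover = pile % 4 != 0
    return 1 if good_for_mover == maximizing else -1


def minimax(pile: int, depth: int, maximizing: bool) -> int:
    """Search the game tree to a fixed depth, then fall back on evaluate()."""
    if pile == 0:
        # The previous player took the last stick and won.
        return -1 if maximizing else 1
    if depth == 0:
        return evaluate(pile, maximizing)
    scores = [
        minimax(pile - take, depth - 1, not maximizing)
        for take in (1, 2, 3)
        if take <= pile
    ]
    return max(scores) if maximizing else min(scores)


def best_move(pile: int, depth: int = 4) -> int:
    """Pick the number of sticks whose resulting position scores best."""
    moves = [take for take in (1, 2, 3) if take <= pile]
    return max(moves, key=lambda take: minimax(pile - take, depth - 1, False))


print(best_move(7))
```

The search tries every move and counter-move, and the evaluation function stands in for everything beyond the search horizon. In chess the tree is vastly larger, which is why such an approach depends on enormous computing power and carefully tuned evaluation.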

Deep Blue was a major milestone in the development of artificial intelligence, and helped to demonstrate the capabilities of computers to perform complex tasks that had previously been thought to require human-level intelligence. The success of Deep Blue also sparked a renewed interest in the field of computer chess and led to the development of many other chess-playing programs.

In the end, heuristic models all suffer from the same problem: as the model gets larger and larger, you run into an “n over n+1” problem, where it gets so complex that it becomes very costly to continue. So there are certain classes of problems that work very neatly with this approach and certain classes that do not.

Deep Learning Neural Networks

As described above, deep learning builds on neural networks: machine learning models made up of layers of interconnected nodes, called “neurons.” These neurons process and transmit information through the network, allowing the model to learn and make decisions based on input data.

Deep learning neural networks are particularly powerful because they can learn and model very complex relationships in data. They do this by using multiple layers of interconnected neurons, which allows them to learn and model patterns and relationships at multiple levels of abstraction. This makes them particularly well-suited for tasks like image and speech recognition, natural language processing, and even playing games.

There are many types of deep learning neural networks, including convolutional neural networks (CNNs), recurrent neural networks (RNNs), and the long short-term memory (LSTM) variant of RNNs, among others. Each of these architectures is designed to tackle specific types of problems and is optimized for certain types of input data.

Geoffrey Hinton got his PhD in artificial intelligence in the 70s and went on to contribute to the success of deep learning models, though initially progress was held back by computational limitations.

Hinton is a computer scientist and researcher who has made significant contributions to the field of deep learning.

Hinton's work has had a major impact on the field of artificial intelligence, and has helped to drive the rapid progress that has been made recently in areas like image and speech recognition, natural language processing, and machine translation. He has received numerous awards and honors for his contributions to the field, including the Turing Award, which is widely considered the “Nobel Prize” of computer science.

Hinton is best known for his work on artificial neural networks, which are the building blocks of deep learning systems. In 1986, together with David Rumelhart and Ronald Williams, he published the paper that popularized the backpropagation algorithm, which is a widely used method for training neural networks. He also helped establish deep learning as a field and championed the idea of using deep neural networks for visual object recognition.
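The core of backpropagation can be sketched on a tiny network in plain Python. Everything specific here is an assumption made for illustration: the two-neuron hidden layer, the sigmoid activation, the squared-error loss, the learning rate, the OR-function training data, and the names `train` and `OR_SAMPLES`. It is a teaching sketch, not the formulation from the 1986 paper.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def train(samples, hidden_size=2, epochs=2000, lr=0.5, seed=0):
    """Train a one-hidden-layer network with backpropagation.

    Returns the loss before training, the loss after, and a forward function.
    """
    rng = random.Random(seed)
    n_in = len(samples[0][0])
    # Each weight list ends with a bias weight, fed by a constant input of 1.0.
    w_hidden = [[rng.uniform(-1, 1) for _ in range(n_in + 1)] for _ in range(hidden_size)]
    w_out = [rng.uniform(-1, 1) for _ in range(hidden_size + 1)]

    def forward(x):
        h = [sigmoid(sum(w * v for w, v in zip(ws, x + [1.0]))) for ws in w_hidden]
        y = sigmoid(sum(w * v for w, v in zip(w_out, h + [1.0])))
        return h, y

    def loss():
        return sum((forward(x)[1] - t) ** 2 for x, t in samples) / len(samples)

    initial = loss()
    for _ in range(epochs):
        for x, t in samples:
            h, y = forward(x)
            # Error signal at the output: loss slope (up to a constant) times sigmoid slope.
            delta_out = (y - t) * y * (1 - y)
            # Send the error backwards through the output weights (chain rule).
            delta_h = [delta_out * w_out[j] * h[j] * (1 - h[j]) for j in range(hidden_size)]
            # Nudge every weight against its share of the error.
            for j, v in enumerate(h + [1.0]):
                w_out[j] -= lr * delta_out * v
            for j in range(hidden_size):
                for i, v in enumerate(x + [1.0]):
                    w_hidden[j][i] -= lr * delta_h[j] * v
    return initial, loss(), forward


# Toy data: the logical OR function.
OR_SAMPLES = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]
initial_loss, final_loss, predict = train(OR_SAMPLES)
print(f"loss before: {initial_loss:.3f}, after: {final_loss:.3f}")
```

The same two steps, a forward pass followed by a backward pass that assigns blame to each weight, are repeated for every added layer, which is why training deep networks was so expensive on 1980s hardware.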

Backpropagation is a method for adjusting the weights of the connections between neurons during the learning process. It allows for much more sophisticated models: many more neurons, many deeper layers. Back in the 1980s, ten-layer-deep networks could be built, but training them took so long that nobody cared, and so the idea went back on the shelf until the 2010s. It was students of Hinton's who dusted off his old papers, reviewed these models, and said: we have a lot more compute now, why don't we try this deep convolutional network approach? But they needed a problem.

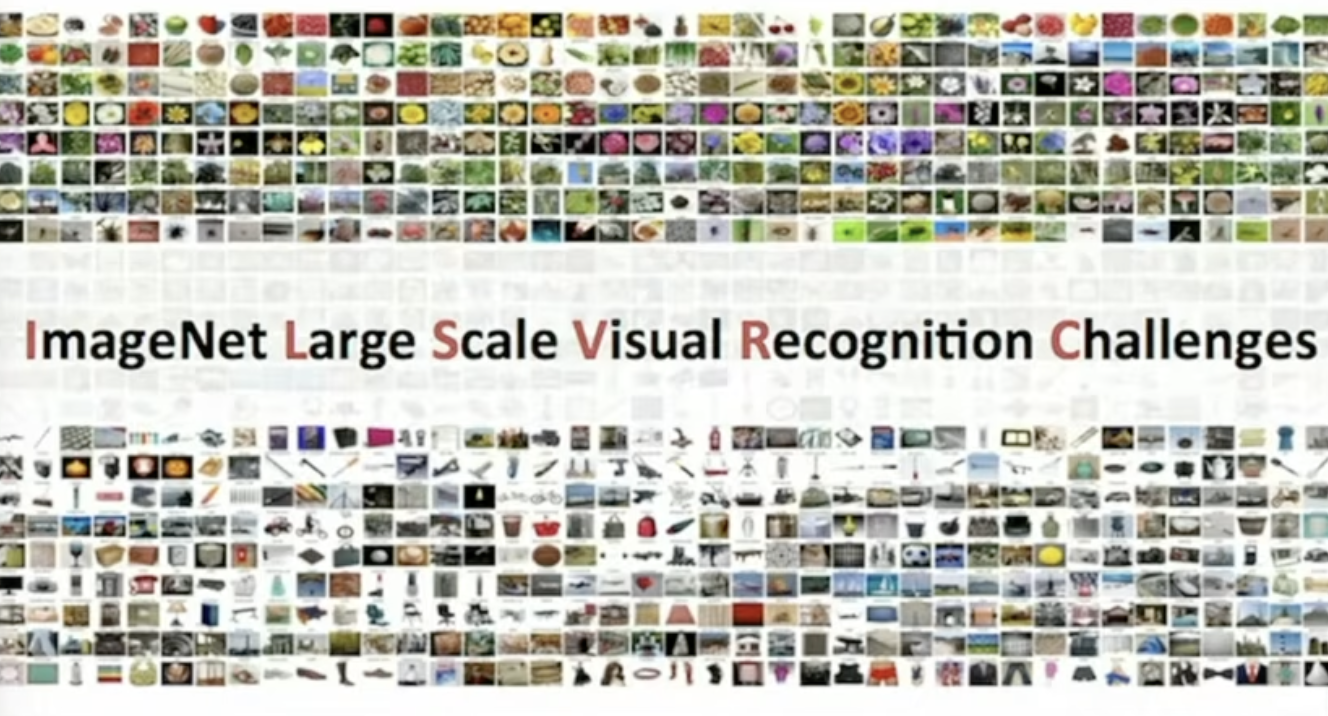

And they found it in a thing called ImageNet. It was started in the mid-2000s by researchers led by Fei-Fei Li, first at Princeton and later at Stanford University.

The ImageNet dataset consists of more than 14 million images, drawn from more than 20,000 different classes, including animals, objects, and scenes. Each image is labeled with one or more class labels, and the dataset is divided into a training set, a validation set, and a test set.

Heuristic image recognition models were getting into the 70% correct range after a few years of development. Hinton's students, taking a deep convolutional network approach, ran through the data, entered the challenge in 2012, and got roughly 85% on the first try. Within a few years, deep networks pushed that figure above 95%. This technology kept spreading and has affected all of us ever since.